Hope not but will see.

One reason why Nvidia currently has a $1T valuation.

Get your GPUs now, guys. When AI takes over everything, they will likely outsource to rando AI farms.

I already know guys that have converted their mining farms of 100+ GPUs to AI processing farms…

And one of them powers the AI behind the simple “gimmie a description and I’ll draw you a picture” app.

Saw this yesterday along with an uptick of rumors about Radeon skipping the 8000 series high end cards.

Most likely pulling the trigger on a 4070 Ti in the next few days.

I’ll post the same thing here that I posted there in that regard.

AMD 7K / Navi 31 cleaned up the Infinity Fabric to the point where chiplets were viable, but IMHO not ready.

AMD wanted to go all in with chiplets for Navi 3x, so they proceeded to produce them anyway.

If Navi 41 and 42 were scrapped, it is likely because the power draw and yields simply aren’t worth it.

Yes, nVidia would have free run of the top tier, but the top tier is a niche market, and the bulk of the market share is in mainstream and e-sports.

Here’s the interesting part that a lot of tech pages and influencers so easily forgot.

CHIPLETS.

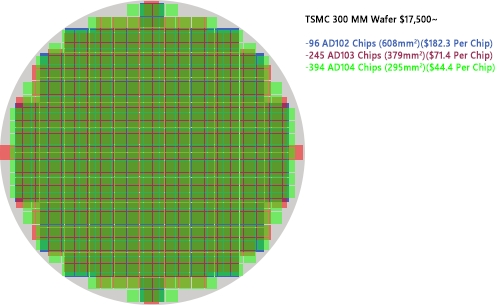

Now, let’s look at this chart I made for RTX 40.

Hypothetically, say Navi 41 and 42 would be equivalent in size to AD103 and AD104.

A typical TSMC 300 mm wafer is around $17,500 (might have gone up north of $20K for 2024).

Look at the chip sizes and yields, and that’s a perfect wafer, which doesn’t exist.
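For anyone who wants to sanity-check die counts like these, here is a rough sketch using a commonly quoted dies-per-wafer approximation (wafer area over die area, minus an edge-loss term). It ignores scribe lines, edge exclusion and defects, and the function name is just mine for illustration, so treat the output as ballpark only.

```python
import math


def gross_dies_per_wafer(die_area_mm2: float, wafer_diameter_mm: float = 300.0) -> int:
    """Ballpark count of whole dies on a round wafer, before any defects."""
    radius = wafer_diameter_mm / 2
    wafer_area = math.pi * radius ** 2
    # Edge-loss term: partial dies around the rim that cannot be used.
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2 * die_area_mm2)
    return int(wafer_area / die_area_mm2 - edge_loss)


# Illustrative die sizes only: one large die, then half and a quarter of it.
for area in (500, 250, 125):
    print(f"{area} mm^2 die: ~{gross_dies_per_wafer(area)} gross dies per 300 mm wafer")
```

On this estimate a 500mm² die comes out a bit over 100 gross dies per wafer, so round numbers are good enough for the argument.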

So we’ll break this down to a lower number to generalize the seriousness of large GPU Chips.

For example’s sake, say NV41 is 500mm².

Say that wafer yields 100 500mm² chips, with 10 surface defects,

so a total usable yield of 90 chips.

Now, let’s say that since AMD re-uses chiplets for memory and I/O controllers separately, they produce 125mm² graphics core chiplets (a quarter of the area) with roughly 40% of the performance of that one large 500mm² chip.

For example’s sake, we’ll call it NV44.

With the same size wafer, you now have 400 chiplets, with 10 defects taking it down to 390.
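Here is that arithmetic as a quick sketch. It assumes every defect kills exactly one die (same as the example above) and only counts raw wafer cost at the $17,500 figure, so packaging, interconnect, memory, R&D and margin are all left out. The function names are made up for illustration.

```python
WAFER_COST_USD = 17_500  # wafer price quoted in the post


def usable_dies(gross_dies: int, defects: int) -> int:
    # Simplifying assumption: each defect ruins exactly one die.
    return gross_dies - defects


def wafer_cost_per_good_die(gross_dies: int, defects: int,
                            wafer_cost: float = WAFER_COST_USD) -> float:
    return wafer_cost / usable_dies(gross_dies, defects)


for name, gross in (("NV41 (large die)", 100), ("NV44 (chiplet)", 400)):
    good = usable_dies(gross, 10)
    lost_pct = 100 * 10 / gross
    cost = wafer_cost_per_good_die(gross, 10)
    print(f"{name}: {good} good dies, {lost_pct:.1f}% lost, ~${cost:.2f} wafer cost each")
```

The same 10 defects cost the big die 10% of the wafer but the small chiplet only 2.5%, which is the whole point of going small.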

Now, let’s assume the next-gen Infinity Fabric interconnect fixes the Navi 3x problems.

AMD can take those 390 chiplets and arrange them on the Infinity Fabric in multiple configurations:

2 or 3 chiplets, with flanking MCDs and I/O chiplets, and a new AI accelerator chiplet.

Assuming the chiplets function correctly,

AMD can produce a SINGLE family of chiplets and scale them the same way they do on the Ryzen CPUs, by adding the GCD chiplets in groups, vs 1 large GPU where EVERYTHING (MCD, I/O, PCIe, display, VPU, RT/AI cores, etc.) is taped out on the same chip.

If the chiplets are separated, the GCDs, MCDs, I/O, PCIe, VPU and AI cores can all be fabricated separately, with the GCDs using the latest fabrication process and the MCDs, etc. all using mature fabrication processes.

You can literally build different tiers of Graphics Cards with the same parts.

Assuming we are using higher-density GDDRx chips at 32 bits per chip and at least 4 GB per chip:

1x NV44 / 1x 64-Bit MCDs 8 GB would be for E-Sports, 40% of the Performance of a NV41

2x NV44 / 2x 64-Bit MCDs 16 GB would be Entry Mainstream, 80% of the Performance of a NV41

3x NV44 / 3x 64-Bit MCDs 24 GB would be High Mainstream, 120% of the Performance of a NV41

4x NV44 / 4x 64-Bit MCDs 32 GB would be for Enthusiasts, 160% of the Performance of a NV41

Remember, they may be 1/4 the size, but since the other components are fabricated separately, each one still delivers 40% of the performance (shoot, even if it’s 30%, it’s still 30/60/90/120%).
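The tier list above can be generated from a handful of numbers. This is only a sketch: it assumes one 64-bit MCD per GCD, 32-bit 4 GB memory chips, and perfectly linear scaling at 40% of an NV41 per chiplet, all taken from the list. The class and function names are hypothetical.

```python
from dataclasses import dataclass

GDDR_CHIP_BUS_BITS = 32  # bus width per memory chip (assumed above)
GDDR_CHIP_GB = 4         # capacity per memory chip (assumed above)
MCD_BUS_BITS = 64        # one 64-bit MCD paired with each GCD
GCD_PERF_PCT = 40        # each NV44 = 40% of a hypothetical NV41


@dataclass(frozen=True)
class CardConfig:
    gcds: int
    bus_bits: int
    vram_gb: int
    perf_pct_of_nv41: int


def build_card(gcds: int, perf_pct_per_gcd: int = GCD_PERF_PCT) -> CardConfig:
    bus_bits = gcds * MCD_BUS_BITS                 # one MCD per GCD
    memory_chips = bus_bits // GDDR_CHIP_BUS_BITS  # chips needed to fill the bus
    # Linear scaling is the post's assumption, not a measured result.
    return CardConfig(gcds, bus_bits, memory_chips * GDDR_CHIP_GB,
                      gcds * perf_pct_per_gcd)


for n in range(1, 5):
    print(build_card(n))
```

Swap in `build_card(n, perf_pct_per_gcd=30)` to get the more pessimistic 30/60/90/120% line-up.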

NV41/42 might have been cancelled, but everyone so quickly forgot that Ryzen 1K and 2K introduced Infinity Fabric, Ryzen 3K brought in chiplets, and Ryzen 5K/7K prove the system works.

Navi 31/32 was rushed IMHO. They didn’t mature the chiplets and Infinity Fabric enough for graphics, and it showed.

Now, the point of this is that you don’t need a big honkin’ wafer-eating chip that costs $1200 to manufacture when you can fabricate chips at $200 each and use them in all your products as the industry demands (1-2-3-4). Even linking 4 chiplets would be cheaper than 1 large die.

NV41, per wafer you’d get 90 GPUs and maybe 10 cut down GPUs.

NV44, per wafer if you wanted all High End cards w/ 4 Chiplets each, you’d get 97.

Extrapolate that over an entire fabrication contract.

And those NV44s would be usable in GPUs of every tier, not just the top tier.
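To put numbers on that flexibility, here is a small sketch of how one wafer's worth of good NV44s could be split up. The 390 figure comes from the example above; the mixed plan is a made-up product mix purely for illustration, not anything AMD has announced.

```python
GOOD_NV44_PER_WAFER = 390  # usable chiplets per wafer from the example above


def cards_per_wafer(good_chiplets: int, chiplets_per_card: int) -> int:
    # Leftover chiplets that cannot complete a card are simply not counted.
    return good_chiplets // chiplets_per_card


def chiplets_needed(plan: dict[int, int]) -> int:
    """plan maps chiplets-per-card to the number of cards of that tier."""
    return sum(size * count for size, count in plan.items())


for size in (1, 2, 3, 4):
    print(f"{size}-chiplet cards only: {cards_per_wafer(GOOD_NV44_PER_WAFER, size)} per wafer")

# Hypothetical mix: 100 e-sports, 60 entry, 30 high mainstream, 20 enthusiast cards.
mixed_plan = {1: 100, 2: 60, 3: 30, 4: 20}
print(f"Mixed plan uses {chiplets_needed(mixed_plan)} chiplets "
      f"for {sum(mixed_plan.values())} cards")
```

Going all-in on 4-chiplet cards gives the 97 per wafer mentioned above, while a mixed plan like this one turns the same wafer into 210 cards across all four tiers.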

But you’d also only have to research and tape out 1 or 2 designs vs 4.

You’d also only have to sign a contract for 1 or 2 fabrication lines vs 4.

2 fab lines blasting out 800 NV44s vs 2 fab lines shoving out 200 NV41s in the same turnaround time, and those 800 NV44s can be used to make all the tiers via the Infinity Fabric interconnect and chiplet designs.

I’m not sure, but feel that I’m in the midst of genius.

Oh, forgot to mention:

TSMC’s 3nm process will have a limit of 400mm²,

so nVidia would not be able to produce their x080 and x090 series on 3nm if they wanted to continue making them at that size. When asked about it, nVidia stated that they are changing company direction over the next few years from gaming to AI.

I just up-viper and act like I understand…

So if I understand correctly - ‘something, something, alchemy, something, something, magic, something, something, makes my video card work, something, something, AI is the new crypto miner and will consume all the tasty chips that the wizards cooked.’?

Thanks @SkateZilla that’s a good read. Safe to say we may have hit the threshold of gain vs price?

Slim chances for a brilliant @Harry_Bumcrack DCS Updater Utility software anytime soon, I take it…?

“Any sufficiently advanced technology is indistinguishable from magic.”

I remember our Aircraft General teacher at flight school used to answer “It’s magic. You don’t need to know how it works, just how you work it” when eager beaver pilot wannabes asked questions that he didn’t have time to explain the complicated answer to.

That’s funny!

I use the “It’s magic” line all the time.

Yesterday my son asked me how babies get into the womb.

He did not accept the “magic” answer and he knew about the Stork.

Almost lost myself in details. Let’s just say he stopped asking questions.

Looking at @chipwich’s article above, I too am all out of questions. I should probably start saving money?

So if I understood correctly, you are saying:

Moving from big GPU dies to more small chiplets will drastically increase yields on the high-end GPUs.

Thus, it will become cheaper to create powerful GPUs.

But… AI developments will or are already drastically increasing demand.

So are you saying:

- We don’t know if prices for high-end GPUs will go up or down?

- Prices will still go up, but AMD will profit hugely?

- AMD will undercut Nvidia in the medium and later high tiers of gaming GPUs?

Or something else entirely?

Also, I was just looking at upgrading from RTX 3080 to an RX 7900 XT, as prices have come down a bit for the new ones but the 3080 seems as popular as ever on the used market.

The best one, the ASUS TUF, does not fit my case by 1.6 mm. Debating if I should be thankful that my case prevents me from buying a bigger GPU that I don’t need or if I should cut the fan frame to stuff the big GPU in there, or just get a smaller model with a shitty cooler and zip-tie my Noctua fans to it, like I did with the 1080.

This AI price scare thingy isn’t helping

I was figuring I’d skip the current gen and sit with my 3070 until the next gen comes along. Whatever the “5070” or equivalent performance/range was.

I really don’t want another 8GB card and since I only spend a small part of my time simming I want good RT performance in my card. That limits my options to cards that are still close to or over $1000 and I’m just not going there.

It would really blow chunks if that never becomes possible.

We shrink down Maverick, Phoenix, Bob, Rooster and their jets using Wayne Szalinski’s shrink ray.

They then execute a low-level bombing run using special bombs.

The 7900 XTX was rushed, so I wouldn’t.

I’d stick with the 3080 for a while longer.