Thought that was why you had Kai?

Wheels

He recently started picking up my mail…so we are getting there…

What??!! I am shocked and dismayed! If ED isn’t hiring MIT’s best and brightest for AI work, then who are they hiring?

AI is a pretty broad term nowadays, and for plain decision logic, games are a nice closed system, so a lot of research is actually done in that area (not because it is good for games, just because AI researchers look for a closed rule-based system and games provide that). A lot of ‘climbing’ algorithms were researched with games in mind and apply well outside of gaming.

AI research has done well in the last few years on problems that call for approximation. Using neural networks for learning has made ‘fuzzy’ tasks like image and speech recognition workable (tasks that logical decision trees struggle with), and it has made the ‘training’ side and the ‘deciding’ side asymmetrical.

Put another way, the key algorithmic advances in CNNs and RNNs over the last ten years or so have allowed huge networks to be trained (on massively parallel machines, i.e. GPUs) and then have the recognition side run on small devices, e.g. an iPhone. So this allows for images to be recognized and speech to be decoded in places where previously it wasn’t possible. A way to think of it is we had breakthroughs in training and in deployment.
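To make that training/deciding asymmetry concrete, here is a minimal sketch. It uses a single perceptron rather than a CNN or RNN, and the toy AND data, the function names and the epoch count are all assumptions made up for illustration. The point is only that training loops over the data many times, while deciding is one weighted sum.

```python
def predict(weights, bias, x):
    """Deciding side: one weighted sum and a threshold."""
    total = bias + sum(w * xi for w, xi in zip(weights, x))
    return 1 if total > 0 else 0


def train(samples, epochs=20, lr=1.0):
    """Training side: repeated passes over the data, nudging weights on every miss."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    steps = 0
    for _ in range(epochs):
        for x, target in samples:
            error = target - predict(weights, bias, x)
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
            steps += 1
    return weights, bias, steps


# Toy data (an assumption for illustration): learn logical AND.
AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias, steps = train(AND_SAMPLES)
print(f"training visited {steps} samples")
print("deciding takes one weighted sum:", predict(weights, bias, (1, 1)))
```

Real networks have millions of weights instead of two, but the shape is the same: the expensive loop happens once on big hardware, and only the finished weights ship to the small device.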

For gaming AI, neural networks don’t really help so much, in that the DCS AI isn’t taught to work better from fuzzy things like images; it’s just plain ifs and ors in an old decision logic tree. It would be fun to consider an AI pilot trained in a neural network just using the inputs of a screen. DARPA about 10 years ago spent a lot of money on it (allegedly). There’s all sorts of work like that with stuff like Mario learning to complete games. We’d just have to make the AI dogfight 100,000 times.
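For anyone wondering what "plain ifs and ors" looks like, here is a rough sketch of a hand-written decision tree for an AI pilot. None of this is taken from DCS: the `Situation` fields, the thresholds and the action names are all hypothetical, invented purely to show the style of logic.

```python
from dataclasses import dataclass


@dataclass
class Situation:
    # Every field here is a made-up illustration, not a DCS variable.
    range_nm: float          # distance to the bandit in nautical miles
    bandit_on_my_six: bool   # is the bandit behind us?
    missiles_left: int
    fuel_fraction: float     # 0.0 (empty) to 1.0 (full)


def decide(s: Situation) -> str:
    """Pick an action by walking a fixed chain of ifs and ors."""
    if s.fuel_fraction < 0.2 or (s.missiles_left == 0 and s.range_nm > 5):
        return "disengage"
    if s.bandit_on_my_six:
        return "break_turn"
    if s.missiles_left > 0 and s.range_nm <= 10:
        return "fire_missile"
    if s.range_nm <= 1:
        return "guns"
    return "close_distance"


print(decide(Situation(range_nm=8, bandit_on_my_six=False,
                       missiles_left=2, fuel_fraction=0.8)))
```

Nothing in there is learned. Every threshold is something a programmer typed in, which is why this kind of AI always reacts the same way to the same situation.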

The worst part is that for me, AI that skips from A directly to Z without any fancy dancy A,B,C,D…Z learning algorithms leaves me with the same outcome since I suck at dogfighting so badly.

Yeah… I was joking.

But still, I would imagine that the AI logic of a wingman in an advanced flight sim isn’t the easiest place to start, if you are researching AI stuff.

Listened to a lecture about the F-35 in the RNoAF yesterday. Since they don’t make two-seaters, a lot of the training will be done in sims.

They have eight sims, just here in Norway, for that purpose. The guy doing the lecture said that this enables tactical training, which they couldn’t do in the F-16 sims, as those had no such capability, i.e. they had no enemy AI to fight.

What’s interesting is that they still don’t have AI enemies in the F-35 sims. They have to fight each other, sim vs. sim and actually real aircraft vs. sim…!

There’s no point in training against an agent if that is not what you’re going to encounter in a real engagement. While the agent may or may not be superior to a human, it might react to situations in very unusual manners that a human never would.

I know where you’re coming from, but I think the truth of that statement is now slowly eroding away.

In January Google’s DeepMind published a blog article about their current approach for AlphaStar (an agent playing StarCraft II). They use a rather complex structure of RNNs and train it in an evolutionary fashion against itself. It’s quite impressive from the ML perspective, but of course one has to keep in mind that each agent they train is a one-trick pony. It can do one strategy; for multiple strategies you need multiple agents. And it can only do PvP. And it can only do it on one map.

Taking all this into account, it is still an amazing feat that AI can learn to play a game as complex as StarCraft at this level, and given more time (and probably an even more complex topology and longer learning times) the AI can learn to choose and apply strategies. The building block (doing a single strategy well) is there.

I haven’t spent much time thinking about it, but comparing StarCraft and DCS I would say that StarCraft is a much more complex set of “rules” than A2A in DCS.

True. The creativity and smarts of BFM lie not in the maneuvers themselves, but in working with the limitations of man and machine and most importantly recognizing and properly exploiting the opponents’ mistakes.

If flown perfectly, against a perfect opponent, it’s a very simple game. Boring almost. But against someone who makes a mistake, who might get angry or afraid, who loses perfect situational awareness, who’s had one G too many and now has lowered tolerance… That’s where interesting things start to happen.

So like all things in a sim, it’s not about making a machine that plays the game perfectly, it’s about making a machine that plays the game convincingly.

You have no idea how hard I had to fight while at the Military Flight Sim to have some people understand that.

Exactly.

And this is what’s so interesting considering all we’ve read about AI taking over the world…

And when we consider the average armchair pilot’s wishes regarding $50 game AI, it becomes pathetic.

Yep…even Skynet made a critical mistake and eventually lost.

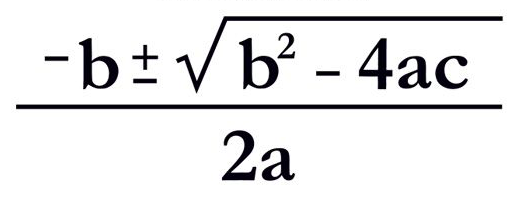

EDIT: Sometimes I wish I could take things more seriously. I actually took a grad school course in AI. It all boiled down to Rule Sets, weighted averages, etc. If one definition of “Intelligence” is the ability to make intuitive decisions based on incomplete information, then there is no “I” in AI.

Yeah, I think you are right and I was off there. Things like the DOTA 2 AI clients are proving to be really capable, even in competitive PvP. The deployment side is getting efficient enough to put in the games as well one day.

Actually, thinking about it, I wrote about DCS AI before, now it rings a bell - The future evolution of Flight Simming. - #46 by fearlessfrog

I also agree with @schurem and @komemiute, in that it is easy to make a flawless opponent, but making a realistic one is harder. Also, making a fun opponent using AI is pretty hard in certain games too. I suppose we could end up with ‘rubber band AI’ that just gets worse as it plays people.
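As a rough idea of what ‘rubber band AI’ could mean in practice, here is a minimal sketch. The single `skill` number, the step size and the limits are all hypothetical; this is one simple way to do it, not how any particular game does.

```python
def adjust_skill(skill, player_won, step=0.05, lo=0.1, hi=1.0):
    """Ease the AI off after it wins, tighten it up after the player wins."""
    skill += step if player_won else -step
    return max(lo, min(hi, skill))


skill = 0.5
for player_won in [False, False, False, True]:
    skill = adjust_skill(skill, player_won)
    print(f"player won: {player_won}, AI skill now {skill:.2f}")
```

The clamp keeps the opponent from ever becoming either a sitting duck or unbeatable, which is the part that keeps it fun rather than realistic.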

Isn’t that what our brains and neural nets do though? The ‘I’ in AI being true intelligence or not is getting more of a philosophical question rather than a math one. We might be a collection of heavily nested weighted averages trained on large sets of data.

Well sort of, machines are taking over some things because they lack some human traits, not despite.

I don’t think that we need cutting edge AI in DCS to be able to build proper missions. But I feel for mission makers that have to script around glaring deficiencies of the AI in their mission design. There’s people with unwarranted expectations, but there’s also people going daily head to head against some of the weakest points of the DCS franchise.

Whoa…that is truly deep… like…like…I think therefore

(Need a Mudspike Philosopher Badge)

Sure.

I was talking about predictions like these ![]()

Me neither.

When René Descartes wrote that, he specifically meant thinking as doubting. I doubt therefore I am.

It is easy to program a bunch of weighted averages to doubt the big blob of data it’s trained on, and the stuff coming in from its inputs. But it never says “I am”.

Therefore Descartes was wrong.

All he needed to do was play sims as well…

Wasn’t that what he was doing?

The good thing about philosophy is you can’t be wrong. As an elective if you needed a B grade you can’t go wrong…