Guys, I am really trying to come to grips with this. Here is my dilemma. I know that I can squeeze more performance out of my machine.

When running DCS I can pull up the performance/FPS overlay. The game shows my GPU with only 10% overhead while my CPU is at 80%.

My specs are:

7800X3D

ASUS TUF RTX 4070 Ti Super OC Edition 16GB

G.Skill Flare X5 AMD EXPO DDR5 64GB 6000 MT/s

Is the GPU my bottleneck?

Don’t look at overall CPU load. DCS cannot and will not use all of your CPU cores fully. Even when the CPU is the bottleneck, you’d probably see only one or two, maybe up to three, logical cores (vCores/threads) maxed out, not all 16 of them.
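To show why the overall number misleads, here is a small Python sketch using a made-up per-thread snapshot for a 16-thread CPU. The load values are hypothetical, not a DCS capture; on a real machine you could read per-thread numbers from Task Manager's logical-processor view or, for example, `psutil.cpu_percent(percpu=True)`.

```python
# Hypothetical per-thread load snapshot (percent) for a 16-thread CPU such as
# the 7800X3D. These numbers are invented for illustration only.
per_thread_load = [100, 97, 45, 30, 20, 15, 10, 10, 8, 8, 5, 5, 5, 5, 3, 3]


def summarize_load(loads, saturated_at=95.0):
    """Return (average load, number of threads at or above `saturated_at`)."""
    average = sum(loads) / len(loads)
    saturated = sum(1 for load in loads if load >= saturated_at)
    return average, saturated


average, saturated = summarize_load(per_thread_load)
print(f"overall: {average:.0f}%  saturated threads: {saturated}")
# prints "overall: 23%  saturated threads: 2"
```

The overall figure looks like a mostly idle CPU, yet two threads are pegged, which is exactly the situation where the CPU can still be the limit.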

Your specs look more than fine. I wouldn’t worry. If you want to test whether your CPU is the bottleneck, try increasing settings that have virtually no CPU cost, such as AA, pixel density, or resolution, and check whether the FPS stays roughly the same. If it does, the GPU had spare capacity and the CPU is the limit. If upping those settings lowers your framerate, you were already well balanced.
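The test described above boils down to a simple comparison. Here is a minimal Python sketch of that heuristic; the 5% tolerance for run-to-run FPS noise is an assumption, and `classify_bottleneck` is a hypothetical helper, not part of any DCS tool.

```python
def classify_bottleneck(baseline_fps, fps_after_gpu_only_increase, tolerance=0.05):
    """Heuristic: raise a GPU-only setting (AA, pixel density, resolution)
    and see whether FPS holds.

    If FPS stays within `tolerance` (relative) of the baseline, the GPU
    absorbed the extra work, so the CPU was the limit. If FPS drops by more
    than that, the GPU was already fully used.
    """
    drop = (baseline_fps - fps_after_gpu_only_increase) / baseline_fps
    if drop <= tolerance:
        return "CPU-bound: the GPU absorbed the extra load"
    return "balanced or GPU-bound: the extra GPU load cost frames"


print(classify_bottleneck(80, 79))  # 1.25% drop, within the assumed noise band
print(classify_bottleneck(80, 68))  # 15% drop, the GPU was already working hard
```

Average FPS over a repeatable flight (same track, same spot) makes the comparison far more reliable than glancing at a single reading.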

Also, someone please split this to a separate topic.

What resolution are you running at (or, worse, do you use VR)? If you are using 4K or VR then yes - even a 4070 Ti is going to be working hard at anything more than the “VR” preset in the settings, in my experience.

That system should be running DCS smoothly at 1080p or 1440p with most settings turned up. Is that what you’re seeing when flying around at those sorts of resolutions on flatscreen?

So sorry, @Torc,

I didn’t specify. I bought a 2K monitor to make sure I maxed it out, and in 2K DCS is glorious. But I play in VR, on the first Pimax Crystal. I’m seeing the 80s (FPS), but I vant more, VANT MORE. LOL

No, that’s OK - I’m sure you’ve told me in the past!

It sounds like your system is running well; I hear the Pimax is a bear to feed with enough performance. If it’s any consolation, I’m having trouble with DCS on a Quest 2 with an old (now! It’s still my best GPU!) 3060 Ti… barely playable in VR online with the lowest settings…

Now I feel like the man with dirty shoes complaining… to the man with no feet

I just wish someone would actually produce some graphics cards so I can buy a new one

Nvidia is trying really hard to get into GN’s 2025 disappointment build with this launch.

I think GPUs are getting to the point where, without something major like a drastic die-size increase and a drastic power increase, the rendering performance limit at the top end has essentially been reached.

To the point where Nvidia shoved more AI cores into their GPUs, increased the number of AI-generated frames, and then changed how FPS is measured for marketing’s sake.

It’s the perfect opportunity for AMD to catch up, because RTX 6 is years away. AMD has been trying to get RDNA 5 to a level no one has seen, which is why the high end was dropped from RDNA 4: the high-end GPUs simply weren’t ready.

High-end RDNA 5 (and originally RDNA 4) was supposed to be 2 (4?) GPU clusters from the 2nd (or 3rd?) level, on a GPU-based Infinity Fabric.

So essentially big-GPU (i.e. large-die) performance at small-GPU (i.e. small-die) cost.

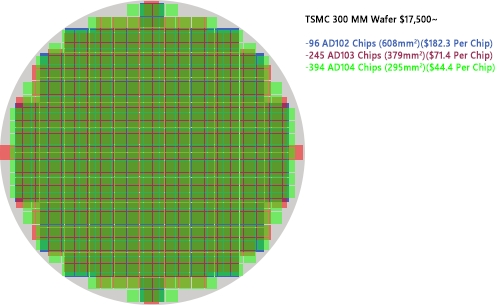

What people don’t understand is that the GB202-400 die in the 5090 is 2x the size² of the 5080/5070 Ti die, so per wafer they get, as a baseline, 75% fewer GPUs. And that’s not counting GPUs lost to wafer defects. So each GPU produced on those wafers costs nearly 7x to 8x as much as the smaller ones, because the yields are significantly lower.
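A rough Python sketch of the arithmetic behind this, using the common dies-per-wafer approximation and a simple Poisson defect model. The die areas (150 mm² and 600 mm², a 4x area ratio picked to match the "75% fewer" baseline above) and the defect density (0.1 defects/cm²) are assumptions for illustration, not real GB202/GB203 figures.

```python
import math

WAFER_DIAMETER_MM = 300.0        # standard 300 mm wafer
DEFECT_DENSITY_PER_MM2 = 0.001   # assumed: 0.1 defects per cm^2


def dies_per_wafer(die_area_mm2, wafer_diameter_mm=WAFER_DIAMETER_MM):
    """Gross-die approximation: wafer area / die area, minus partial dies at the edge."""
    radius = wafer_diameter_mm / 2
    gross = math.pi * radius ** 2 / die_area_mm2
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2 * die_area_mm2)
    return math.floor(gross - edge_loss)


def poisson_yield(die_area_mm2, defect_density=DEFECT_DENSITY_PER_MM2):
    """Fraction of dies with zero defects under a simple Poisson model."""
    return math.exp(-die_area_mm2 * defect_density)


def good_dies(die_area_mm2):
    """Expected defect-free dies per wafer."""
    return dies_per_wafer(die_area_mm2) * poisson_yield(die_area_mm2)


small_area, large_area = 150.0, 600.0  # hypothetical die areas (4x ratio)

# With the same cost per wafer, cost per good die scales with 1 / good_dies.
cost_ratio = good_dies(small_area) / good_dies(large_area)

print(f"candidate dies: {dies_per_wafer(small_area)} vs {dies_per_wafer(large_area)}")
print(f"good dies: {good_dies(small_area):.0f} vs {good_dies(large_area):.0f}")
print(f"cost per good large die: {cost_ratio:.1f}x a small one")
```

Under these assumptions the large die gets 416 vs 90 candidates per wafer (edge loss hurts big dies more, so it is slightly worse than 75% fewer), and after defects each good large die costs about 7.2x as much. Changing the defect density moves that ratio a lot, which is why real-world figures vary.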

I made this a while back to illustrate:

The lines used for fabricating the large GPUs take time and wafers away from the small ones as well.

So, if AMD can get RDNA 5 working efficiently with the GPU Infinity Fabric, they could literally run only mid-tier lines producing, say, RDNA5 Navi 52 dies, which would give 5-6x the yield thanks to the smaller die size, with fewer total units lost to defects per batch. Then they could place 4 of them on a single Infinity Fabric layer, let individual GPU clusters sleep (e.g. in desktop mode), and add things like HEVC encoder clusters separately.
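A rough Python sketch of why splitting one big GPU into smaller chiplets wastes less silicon. It assumes a simple Poisson defect model, a hypothetical 600 mm² total GPU, a defect density of 0.1 defects/cm², and that every chiplet is tested before packaging ("known good die"), so a defect scraps only the chiplet it lands on. It ignores the fabric/interconnect area, packaging yield, and the latency cost discussed in this thread.

```python
import math

DEFECT_DENSITY_PER_MM2 = 0.001  # assumed: 0.1 defects per cm^2


def poisson_yield(area_mm2, defect_density=DEFECT_DENSITY_PER_MM2):
    """Probability that a die of this area has zero defects."""
    return math.exp(-area_mm2 * defect_density)


def usable_silicon_fraction(total_area_mm2, chiplets):
    """Share of fabricated silicon that ends up in sellable GPUs when the
    design is split into `chiplets` equal dies tested individually.

    A monolithic die (chiplets=1) is lost entirely if any defect lands on it;
    with chiplets, only the defective piece is discarded.
    """
    return poisson_yield(total_area_mm2 / chiplets)


TOTAL_AREA_MM2 = 600.0  # hypothetical high-end GPU

for count in (1, 2, 4):
    share = usable_silicon_fraction(TOTAL_AREA_MM2, count)
    print(f"{count} die(s) of {TOTAL_AREA_MM2 / count:.0f} mm^2: {share:.0%} of silicon usable")
```

Under these assumptions the monolithic design keeps roughly 55% of its silicon while four chiplets keep roughly 86%. Whether that saving survives depends on how well the fabric hides the extra latency.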

It’s the same way the Zen CPUs have been a huge home run. It might not show in the Steam Hardware Survey, but AMD runs 1/4 the lines at TSMC because they build CPU clusters and mount them on Infinity Fabric to create 4-, 8-, 16-, 32- and 64-thread CPUs instead of a separate die for each, then share common components (DDR controller, etc.) between all the chips. That saved costs exponentially.

I love it when you talk dirty to us.

Internal SLI. Instead of packing more and more cores into a single chip, it’s blatantly obvious that making more small chips and linking them together saves costs in a number of ways.

The main issue is linking them together so that the performance penalty of using two small chips instead of one double-sized chip isn’t egregious.

SLI itself was abandoned when it became too much. There have been cards with 2 GPUs on them as well, but besides the space saving they weren’t much better. AMD’s way should be the best if they can make it work, although they still have to play catch-up on the ray tracing side.

This is why RDNA 4’s Navi 41 GPUs were cancelled: the GPU-abstracted Infinity Fabric couldn’t run at a clock high enough to sync the data and eliminate latency.

When I built my first gaming PC, the i5-4690K was a well-performing CPU at relatively low power. At the time, AMD had, IIRC, Bulldozer CPUs, with bad gaming performance and huge power draw, and they did indeed sound like construction vehicles.

But AMD turned it around with the Ryzen CPUs. The first two generations, while having stellar multicore productivity performance at high efficiency, were still mediocre for gaming, while Intel’s 9th gen reigned supreme. But with their third gen, Ryzen 5000, they started to beat Intel, and at much lower TDP. The 3D V-cache brought another huge boost to CPU gaming performance, but the chiplets were already winning. Intel seems mostly focused on laptops and productivity workloads now, with no attempt at making a gaming CPU this generation.

I remember when the R9 290X was the big die with many processors, huge memory bandwidth and matching power consumption, while the GTX 970 could match it in games due to higher clock speeds and smart tricks around memory access, and Nvidia’s higher cards easily beat it.

Despite all the Infinity Fabric and other architectural changes, AMD’s GPUs are still lagging behind Nvidia.

However, as Nvidia turns its focus to Machine Learning and Tensor Processing, AMD could bring us the same thing they did in CPUs.

I fully expect that like the first Ryzens (1000 to 3000), RDNA5 won’t be fully low-latency capable yet. This will remain an issue in VR. We have already seen this with the RX 7900 XTX, which doesn’t outperform the RX 6900 XT in VR, despite having more processing power.

But after a generation or two, this could be great. Whether Nvidia’s AI has gotten so good at imagining our games that we prefer that over raw performance by then, I don’t know. AMD has always been a generation behind Nvidia with ray tracing, but with the latest DLSS tech it seems like they are two generations behind.

Interesting to see where GPU tech will take us nonetheless. Moore’s Law is really dead, and the exciting part has started!

Did it? The gaming market just got dwarfed. Nobody is really trying. AI AI AI AI.

AMD is honest about it, and Nvidia is trying to fool us. But for gaming, they are kinda both aiming for the RTX 3060 mass market success.

Yeah you’re probably right. I shouldn’t submit long posts after I’ve had a few beers

Nono, please do

The developments are pretty exciting. Just not really for gamers, at least not as much as they hoped to.

I mean, frame gen is “nice”, but not the god-like thing they would like it to be.

Maybe the next GPUs will enable better game AI at some point, so bots don’t feel like lobotomized idiots? Graphics are not everything, and MMOs come with issues like cheating or anxiety. Or maybe they can frame-gen the sh*t out of old classics, so it’s cheap to relive old games in high-res with natural face movement? The kind of games Damson is uploading videos of lately.

Just brainstorming…

I wish more old games would get the RTX treatment - Quake 2 RTX looks amazing even with the old low-poly models and low-res textures!

Bulldozer was horrible at EVERYTHING. Don’t sugarcoat it.

If I told you why the 7900 XTX has such big latency issues, you’d be upset. It’s a small thing, but it causes huge latency between chiplets.